A previous article of mine laid out the basics of my theory on the different grades of automation and technology at large. A topic as complex as this one (no pun intended!) requires much deeper explanation and a more in-depth expression of thought. Thus, I will dedicate this particular post towards expanding upon these concepts.

Technist thought dictates that all human history can be summarized as “humans seeking increased productivity with less energy”. Reduced energy expenditure and increased efficiency drive evolution: the “fittest” that Herbert Spencer described in 1864 as the key to survival are defined not by intelligence or strength, but by efficiency. Evolution as a semi-random phenomenon leads to life-forms that expend the least amount of energy in order to maximize their chances at reproduction in a particular environment. This is usually why species go extinct: their methods of reproduction are not as efficient as they could be, meaning they’re wasting too much energy for too little profit. When a new predator or existential threat arises, what may have been the most efficient model before becomes obsolete. If a species does not adapt and evolve quickly enough (finding a new way to survive, and doing so efficiently enough that it does not use up its food too quickly), its genes die off permanently.

The universe itself seeks the lowest-energy state at all possible opportunities, from subatomic particles all the way to the largest structures known to science.

If we were to abandon the chase for greater efficiency, we’d effectively damn ourselves to utter failure. This isn’t because things are inevitable, but because of the nature of this chase. It’s like running across a non-Newtonian liquid: you need to keep running because the quick succession of shocks causes the liquid to act as a solid and, thus, you can keep moving forward. If you slow or stop at any point, the liquid loses its solid characteristics and you sink.

This is how real life works. If you’re scared of sinking, the time to second-guess crossing the pool of non-Newtonian liquid was before you stepped on it. Except with life, we don’t have that option; we have to keep moving forward. If we regressed, the foundations of our society would explode apart. Even if we were to slow ourselves and be more deliberate in our progress, the consequences could be extremely dire. So dire that they threaten to undo what we’ve done. This is one reason why I’ve never given up being a Singularitarian, despite my belief that the Singularity will not be an excessively magical turning point in our evolution, and despite those who claim that we should avoid it: it’s too late for that. If you didn’t want to experience the Singularity, then curse your forefathers for creating digital technology and mechanical tools. Curse your distant siblings for reproducing at such a high rate and necessitating more efficient machines to care for them. Curse evolution itself for being so insidious as to always follow the path of least resistance.

Efficiency. That’s the word of the day. That’s what futuristic sci-tech really entails— greater efficiency. Things are “futuristic” because they’re, in some way, more efficient than what we had in the past. We approach the Singularity because it’s a more efficient paradigm.

For us humans, our evolution towards maximum efficiency began before we were even human. Humanity evolved due to circumstances that led to a species of hominid finding an incredibly efficient way to perpetuate its genes: tool usage. Though we are a force of nature with only our bare bodies, without our tools we are just another species of ape. Tools allowed us to more efficiently hunt prey. Evidence abounds that australopithecines and Paranthropus were likely scavengers who seldom used what we’d recognize as stone-age tools. They were prey, and in the savannas of southeast Africa, they were forced to evolve bipedalism to more efficiently escape predators and use their primitive tools.

With the arrival of the first humans, Homo habilis and Homo naledi, we made a transition from prey to predator ourselves. Our tools became vastly more complex due to our hands developing finer motor skills (resulting in increased brain size). To the untrained eye today, the difference between Homo habilis tools and Australopithecus afarensis tools is negligible. What matters is how they made these tools. So far, there’s little evidence to suggest that australopithecines ever widely made their own tools; they found stubble and rocks that looked useful and used them. Through millions of years of further development (perhaps validating Terence McKenna’s Stoned Ape theory?), humans managed to actively machine our own tools. If a particular rock wasn’t useful for us, we would make it useful by turning it into a flinthead or a blunt hammer. We altered natural objects to fit our own needs.

This is how we made the transition from animal of prey to master predator and eventually reached the top of the food chain.

However, evolution did not end with the arrival of Homo habilis and early manufacturing. Our tool usage allowed us to do much more with much less energy, and as a result of our improving diets, our bodies kept becoming more efficient. Our brains grew so that we’d be able to develop ever more advanced tools. The species with the best tools worked the least and thus needed the least amount of food to survive: one well-aimed spear could drop a mammoth. The archaic species who used simpler tools had to do more work, requiring greater amounts of food across smaller populations. Australopithecines couldn’t keep up with their human cousins and went extinct not long after we arrived. Their methods of hunting were primitive even by the standards of the day; as mentioned above, they were a genus of scavengers more than they were hunters. They lacked the brainpower to create exceedingly complex tools, meaning that they were essentially forced to choose between throwing rocks at mammoths or waiting for them to die of other causes, sometimes that cause being humans killing one and losing track of it.

Human species diverged, with some evolving to meet the requirements of their new environments: Neanderthals and Denisovans evolved to sustain themselves in the harsher climates of Eurasia, while the remaining Erectus and Heidelbergensis/proto-Sapiens populations remained in Africa. We all developed sapience, but circumstances doomed all other species besides ourselves, the Sapiens. We still don’t quite understand all the circumstances that led to the demise of our brother and sister humans, but it’s most likely due to increased competition with us as we spread out from Africa. Neanderthals lasted the longest, and all paleoarchaeology points to the idea that they were actually more advanced tool creators than we were at the time. Alas, the environments in which they evolved damned them to more difficult childbirth and, thus, lower birthrates, which proved fatal when they were finally forced to face us. Sapiens evolved in warm, sunny, and tropical Africa, which had plentiful food and easy prey. Childbirth for us became easier as our children were born with smaller brains that grew with age. Neanderthals evolved in cold, dark Eurasia, where food was much more difficult to find. This meant that their populations had to be smaller than our own just so they could actually survive, lest they overpopulate, consume all possible prey too soon, and doom themselves to a starved extinction. Of course, this also meant that they had to be more creative than we did since their prey was often more difficult to kill and harder to come across.

Though we interbred over the years, they finally died out around 30,000 years ago, leaving only ourselves and one mysterious species, the soon-to-be-extinct Homo floresiensis, around. We had no competition but ourselves and our brains had reached a critical mass, allowing us to create tools of such high complexity that we were soon able to begin affecting the planet itself through the rise of agriculture.

Again, to ourselves, these tools seem cartoonishly primitive, but if a trained eye compared a Sapiens’ tool circa 10,000 BC to an Australopithecus tool circa 2.7 million BC, they would find the former to be infinitely more skillfully created.

When the last ice age ended, all possible threats to our development faded, and our abilities as a species skyrocketed.

Yet it still took another 7,000 years for us to begin transitioning to the next grade of automation.

All this time, through all our evolutionary twists and turns, each and every species and genus mentioned above only ever used Grade-I automation.

You only need one person to create a Grade-I tool, though societal memetics and cultural transmission can assist with developing further complexity— that is, learning how to create a tool using methods passed down over generations of previous experimentation.

Let’s use myself as an example. If you threw me out into the African savanna to reconnect me with my proto-human ancestors, you would watch me struggle to survive using tools that are squarely Grade-I in nature. Some often joke about how, if they were sent back in time, they would become living gods because they would recreate our magic-like modern technology. As I will explain in my discussion of Grade-III automation, that’s bullshit. I could live in the savanna for the rest of my days, and never will I be able to recreate electric lights or my Android phone. I will, however, be capable of creating predatory tools and basic farming equipment. I will be able to create wheels and sustain fire, and I will be able to create shelter.

These things are examples of Grade-I automation. I don’t use my hands to farm for maize; I use farm tools. I don’t use my hands to kill animals; I use weapons. If I spend my life practicing, I could create some impressive tools to ease the burden of labor. The maximum amount of energy needed to create all the tools I need to survive comes from food. The most advanced tool created requires no energy beyond what I expend to make it work. Society, if it exists, needs little more than food and sunlight to fuel itself.

That’s Grade-I automation in a nutshell: I am all I need. Others can assist, but my hands fill my stomach. I create and understand all my tools. I understand that, when I create a scythe, it’s to cut grass. When I create a wheel, it’s to aid in transporting items or myself. When I create clothes, it’s just for me to wear.

At the end of this evolution, Grade-I automation allows one to create an entire agrarian civilization. However, while our tools became greatly complex, they were still in the same grade as tools used by monkeys, birds, and cephalopods. As our societies became ever more complex, our old tools were no longer efficient enough to support our need for increased productivity. Our populations kept rising, and civilizations became connected by more threads of varying materials. You couldn’t support these societies just with hand-pushed plows, spears, and sickles. And because of this, society required tools that took more than just one hand and one mind to create.

Grade-II automation finally arrives when we require and create complex machines to keep running society. Here, cultural transmission begins to diffuse. My society began with just myself, but now there are multiple people living in a little city of mud-huts we’ve created. However, over time, our agrarian collectives begin producing more than enough food for us to subsist upon. The population of my personal civilization creeps upward. We begin considering new ways to produce more food with fewer hands to support this higher population; simply putting seeds in the ground and slaughtering cattle isn’t good enough. Those that generate the biggest surpluses are able to trade their goods for others to use, transactions that result in the creation of money as a medium of exchange in order to make the whole system more efficient. There’s an incentive to generate even bigger surpluses to sell, and this requires more labor than society can provide, despite our increasing population. We need more labor, but if we increase our population, we’ll need more goods, which means we’ll need more labor. Without Grade-II automation, we’ll become trapped in a cycle of perpetuated poverty. But we will always seek out increased efficiency and productivity because we naturally seek to expend the least amount of energy possible. If we were to keep our traditional ways, we’d be acting irrationally and endangering our own survival as a species.

In order to create labor-saving devices for the workers to use, we needed specialized labor. Not everyone could create these tools (the agrarian society would collapse without peasants and farmers), and even if they could, there’s a new problem: these new tools require several hands to create. Certain materials are better to use than others. Iron is superior to wood; bronze is more useful for various items than stone. However, if I were tasked with creating these new, futuristic tools, I’d be stumped. I was raised to be a farmer. If I were trained to create a mechanical plow, I’d still be stumped: how on Earth do I create steel, exactly? Where does one get steel? How does a clockwork analog computer work? How did the Greeks create the Antikythera mechanism? I don’t know! How does one create a steam engine? I don’t know! I could learn, but I couldn’t be responsible for all of it myself. I need help. I could create the skeleton of a farming mechanism, but I need someone else to machine the steel teeth of this beautiful plow. I need someone to refine the iron needed to create steel. I need someone to mine the iron.

In a society that’s beginning to create early Grade-II technologies, specialization is fast becoming a major problem that needs rectification. The way to rectify it is with mercantilism and globalism. Naturally, the “global” economy of my society isn’t very global in practice. There are multiple countries that bring me what I need, but usually what I need can be created in my own nation by native hands. I just need to train those native hands and let some practice these new trades to figure out how to better create the tools and gadgets they need to use and sell.

This paleoanthropological discussion became unexpectedly socioeconomic in nature, but that’s the nature of our evolution. The evolution of automation and tool usage is directly related to the evolution of humanity just as it is directly related to the evolution of social orders and economic systems.

In my basic article introducing the graded concept, I mentioned what a properly advanced Grade-II society would look like: something akin to the 1800s, right up to and including the point when our tools become electrically powered.

Grade-II tools are too complex for any one person to create and fully understand, but if you had a small team’s worth of people, it becomes more than possible. Thus, you’re able to employ more people while also producing a surplus of goods. It takes only one hand to craft a hoe (don’t start), but it takes many hands in many places to construct a tractor. Productivity skyrockets, and one becomes capable of supporting exponentially larger populations as our systems of agriculture, industry, and economic activity become more efficient. I have more surpluses I use to employ others, and I can give surpluses back to those I employ, allowing more surpluses to be made all around.

Millions of jobs are made as machines require specialized labor to oversee different parts of their usage— refinement of basic materials, construction of the tool itself, maintenance of the tool, discarding broken parts, etc.

But one basic factor to remember in all this: every machine requires a human brain to work, even if machine brawn can do the work of 50 men. Even if I have a proper and practical Rube Goldberg machine as a tool, it still requires me to run it.

In the 1700s and 1800s, machines underwent an explosion of complexity thanks to steam power, later electricity, and radically new manufacturing methods. Ever since the early days of civilization, we had learned to harness the power of mechanical energy to use in our machines, energy greater than what a single person could put out. By the time the Industrial Revolution exploded onto the scene, we had begun using steam engines, and eventually electrical generation, to do what simple mechanical power could never achieve. These new power sources allowed us to move past mere mechanical resistance and achieve far greater than break-even industrial production.

It used to be that 50 people produced enough goods for 50-55 people to consume, essentially making everything subsistence-based. Over time, this slowly increased as more efficient production methods came about, but there was never any quantum leap in productivity. But with the Industrial Revolution, all of a sudden 50 people could create enough goods to meet the needs of 500 or more.
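To put rough numbers on that jump, here is a minimal Python sketch using only the figures from the paragraph above (50 workers supplying 50-55 people before, 500 after). The function name `people_supported_per_worker` is my own illustrative label, not an established economic measure, and the inputs are the essay's round numbers rather than measured data.

```python
def people_supported_per_worker(workers: int, people_supplied: int) -> float:
    """Return how many people's needs each worker covers, on average."""
    if workers <= 0:
        raise ValueError("workers must be positive")
    return people_supplied / workers


# Illustrative figures from the text above, not historical measurements.
pre_industrial_low = people_supported_per_worker(50, 50)    # break-even
pre_industrial_high = people_supported_per_worker(50, 55)   # slim surplus
industrial = people_supported_per_worker(50, 500)           # after industrialization

# How many times more each worker supplies compared with the best pre-industrial case.
leap = industrial / pre_industrial_high

print(f"pre-industrial: {pre_industrial_low:.1f} to {pre_industrial_high:.1f} people per worker")
print(f"industrial:     {industrial:.1f} people per worker")
print(f"improvement:    roughly {leap:.1f}x")
```

Even taking the most generous pre-industrial case, each worker goes from barely covering themselves to covering about ten people, which is the difference between subsistence and surplus.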

More than that, we began creating tools that were so easy to use that unskilled laborers could outproduce the most skilled workers of generations prior. This is what wrought the Luddites: contrary to popular belief, the Luddites did not fear machines as such; they feared the weakening of organized skilled labor and the depression of wages. It just happened that machines were the reason why skilled laborers faced such an existential threat. After all, while specialization was needed to create these new tools, one didn’t actually need to be a genius to operate them. Thus, the Luddites saw only one solution to the problem: destroy the machines. No machines, no surplus of unskilled labor, no low wages.

The Luddites’ train of thought was on the right path, but they completely overlooked the possibility that the increased number of low-skill low-wage laborers would lead to a higher demand for high-skill high-wage laborers to maintain these machines and create new ones. Overall, productivity would continue increasing all around and even more people would become employed.

The Luddites’ unfounded fears have historically become codified as what economists have come to refer to as the Luddite Fallacy: the fear that new technology will lead to mass unemployment. Throughout history, the exact opposite has always proven true, and yet we keep falling for it.

Certainly, it’ll always prove true, right? Well, times did begin to change as society’s increased complexity required even more specialized tools, but in the end, the feared mass unemployment of all humans has not occurred and did not occur when some first expected it to do so. That moment was the arrival of Grade-III automation.

Grade-III automation is not defined by being physical, as Grade-II was. In fact, it is with this grade that cognitive processes began being automated. This was a sea change in the nature of tool usage, as for the first time, we began creating tools that could, in some arcane way, “think.”

Not that “think” is the best word to use. A better word might be “compute”. And that’s the symbol of Grade-III automation— computers. Machines that compute, crunching huge numbers to accomplish cognitive-based tasks. Just by running some electricity through these machines, we are able to calculate processes that would stump even the most well-trained humans.

Computers aren’t necessarily a modern innovation: abacuses have existed since Antiquity, and analog computing was known even to the Greeks, as mentioned above. Looms utilized guiding patterns to automate weaving. But despite this, none of these machines could run programs; humans were still required to actively exert energy to use these processes. Later electrical analog computers were somewhat capable of general computation (the first Turing-complete computer was conceptualized in 1833), but for the most part, they were nowhere near as capable as their digital counterparts due to not being reprogrammable.

Digital computers lacked the drawbacks of analog computers and were so incredibly versatile that even the first creators could not fathom all their uses.

With the rise of computers, we could program algorithms to run automatically without supervision. This meant that there were tools we could allow computers to control, tools that previously could only be run by humans. For most tools, we didn’t digitally automate all processes. Cars are an example: while the earliest cars were purely mechanical in nature and required the utmost attention for every action, more recent automobiles possess features that allow them to stay in lanes, hold a set speed (cruise control), drive themselves in certain situations (autopilot), and even operate fully autonomously (though this is still experimental). Nevertheless, all commercial cars still require human drivers. And even when we do create fully autonomous commercial vehicles, their production won’t be fully automated. Nor will their maintenance.

And here’s where specialization simultaneously becomes more and less important than ever before.

Grade-III automation requires more than just a small group of people to create. Even advanced engineers and veritable geniuses cannot fully understand every facet of a single computer. The low-skilled workers fabricating computer chips in Thailand can’t begin to understand how the parts of the chips they’re creating work together to form personal computers. All the many parts of a computer chip come together to form the apex of technological complexity.

In my personal civilization, I can’t create a microprocessor in my bedroom. I don’t have the technology, and I don’t know how to create that technology. I need others to do that for me; no single person that I employ will know how to create all parts of a computer either. Those who design transistors don’t know how to refine petroleum to create the computer tower, and the programmer who designs the many programs the computer runs won’t know how to create the coolant to keep the computer running smoothly. Not to mention, the programmer is not the only programmer— there are dozens of programmers working together just to get singular programs to run, let alone the whole operating system.

Here is where globalism becomes necessary for society to function. Before, you needed more than one group of people to create highly complex tools and machines, but to create Grade-III automation, it truly is a planetary effort just to get an iPad on your lap. You need more than just engineers; you need the various scientists to actually come up with the concepts necessary to understand how to create all these many technologies.

Once it all comes together, however, the payoff is extraordinary, even by the standards of previous experiences. Singular people are able to produce enough to satisfy the needs of thousands, and businesses can attain greater wealth than whole nations. The amount of labor needed to create these tools is immense, but these machines also begin taking up a larger and larger share of this labor. And because of the sheer amount of surpluses created, billions of jobs are created, with billions more possible. We can afford to employ all these people because we’ve created that much wealth.

I don’t need to understand the product I sell, nor do I need to create it; I just need to organize a collective of people to see to its production and sales. We call these collectives “businesses”— corporations, enterprises, cooperatives, what have you.

Society becomes incredibly complicated, so complicated that whole fields of study are created just to understand a single facet of our civilization. Naturally, this leads to alienation. People feel as if they are just a cog in the machine, working for the Man and getting nothing out of it. And true, many business owners and government types are far, far less than altruistic, often funding conflicts and strife in order to profit from the natural resources needed to create tools to sell more goods and services. Exploitation is not just a Marxist conspiracy; it’s definitely real. Whether it’s avoidable is another debate entirely— socialist experiments and regimes across the world have been tried, and they’ve only exacerbated the same abuses they claimed to be fighting. Merely changing who owns the means of production, changing who owns the machines doesn’t change the fact that the complex nature of society will always lead back to extreme alienation.

I buy potato chips for a salty snack. I had absolutely nothing to do with the creation of these chips. Even if I were part of a worker-owned and managed commune that specialized in the production of salty snacks, I didn’t grow the potatoes or the corn flour, nor did I create the plastic bags, nor did I create the flavoring. And I especially had nothing to do with the computerized assembly line.

I own the means of production collectively alongside my fellow workers and the members of my community (essentially meaning everyone and no one actually owns the machines), but I still feel alienated. The only way to end alienation would be to create absolutely every tool I use, grow everything I need to eat, and create my own dwelling. If I didn’t want to feel any alienation whatsoever, that means I cannot use anything that I (or my community) did not create. The assembly line uses steel that was created thousands of miles away, meaning I cannot use it. The hammer I use to fix the machine is made out of so many different materials— metals, composites, etc.— that I don’t even want to begin to try to understand all the labor that went into creating it, just that it was probably made in China. The chips? I might purchase one batch of spuds, but after that, I want nothing to do with other communities whose goods and services were not the result of my own labor— otherwise I’d just feel alienated from life. Salt cannot be used if we cannot find it; same deal with the flavoring. And if I can make bags from animal skin or plants, then only then will I have a bag to hold these chips.

This is an artificial return to using Grade-I and maybe a few Grade-II tools. Grade-III is simply too global. Of course, while this is a utopian ideal that’s popular with eco-socialists and fundamentalists, the big issue (which I discussed earlier) is that we no longer exclusively use Grade-I and II tools for a specific reason: our population is too large and our old methods of production were too inefficient. The only way to successfully manage a return to an eco-socialist utopia would be if we decreased the human population by upwards of 75-80%. Otherwise, if you think our current society is wasteful and damaging to Earth, prepare to be utterly horrified by how casually 7.5 billion subsistence farmers would rape the planet. If we increased efficiency by too much (i.e. enough to support such a large population that we’ve forced upon ourselves), you’d have to scrap plans to end alienation and return to creating at least the more complex parts of Grade-II automation.

If you’re willing to accept alienation, then we will continue onwards from what you have now.

We will continue seeking efficiency. We will continue seeking more productivity from less labor. As Grade-III technologies become more efficient, workers need less and less skill to utilize the machines, which further opens up an immeasurable number of jobs to be filled.

I feel I should pause here to finally address energy production and consumption. This is what drives our ever-increasing complexity in society, as without greater amounts of energy at its disposal, even a society of supergeniuses could not kickstart an industrial revolution.

Our tools require ever more power, and the creation of the means of generating this power in turn results in us requiring more power.

Once upon a time, all of human society generated little more than a few megawatts globally. As mentioned above, Grade-I relied purely on human and animal muscle, with virtually nothing else beyond fire and the direct effects of solar power.

From EnergyBC:

For all but a tiny sliver of mankind’s 50,000 year history, the use of energy has been severely limited. For most of it the only source of energy humans could draw upon was the most basic: human muscle. The discovery of fire and the burning of wood, animal dung and charcoal helped things along by providing an immediate source of heat. Next came domestication, about 12,000 years ago, when humans learned to harness the power of oxen and horses to plough their fields and drive up crop yields.

The only other readily accessible sources of power were the forces of wind and water. Sails were erected on ships during the Bronze Age, allowing people to move and trade across bodies of water. Windmills and water-wheels came later, in the first millennium BCE, grinding grain and pumping water. These provided an important source of power in ancient times. They remained the most powerful and reliable means to utilize energy for thousands of years, until the invention of the steam engine.

Measured in modern terms, these powerful pre-industrial water-wheels couldn’t easily generate more than 4 kW of power. Wind mills could do 1 to 2 kW. This state of affairs persisted for a very long time: “Human exertions… changed little between antiquity and the centuries immediately preceding industrialization. Average body weights hardly increased. All the essential devices providing humans with a mechanical advantage have been with us since the time of the ancient empires, or even before that.”

With less energy use, the world was only able to support a small population, perhaps as little as 200 million at 1 CE, and gradually climbing to ~800 million in 1750 at the beginning of the industrial revolution.

Near the end of the 18th century, in a wave of unprecedented innovation and advancement, Europeans began to unlock the potential of fossil fuels. It began with coal. Though the value of coal for its heating properties had been known for thousands of years, it was not until James Watt’s enhancement of the steam engine that coal’s power as a prime mover was unleashed.

The steam engine was first used to pump water out of coal mines in 1769. These first steam pumps were crude and inefficient. Nevertheless by 1800 these designs managed a blistering output of 20 kW, rendering water-wheels and wind-mills obsolete.

Some historians regard this moment as the most important in human history since the domestication of animals. The energy intensity of coal and the other fossil fuels (oil and natural gas) absolutely dwarfed anything mankind had ever used before. Many at the time failed to realize the significance of fossil fuels. Napoleon Bonaparte, when first told of steam-ships, scoffed at the idea, saying “What, sir, would you make a ship sail against the wind and currents by lighting a bonfire under her deck? I pray you, excuse me, I have not the time to listen to such nonsense.”

Nevertheless, the genie was now out of the bottle and there was no going back. The remainder of the 19th Century saw a cascade of inventions and innovations hot on the steam engine’s heels. These resulted from the higher amounts of energy available, as well as to improved metalworking (through the newly-discovered technique of coking coal).

In agrarian societies, untouched by industrialization, the population growth rate remains essentially zero. However, in the 1700 and 1800s, these new energy harnessing technologies brought about a farming, as well as an industrialization revolution, profoundly changing man’s relation to the world around him. Manufactured metal farm implements, nitrogen fertilizers, pesticides and farm tractors all brought crop yields to previously unbelievable levels. Population growth rates soared and these developments enabled a population explosion in all industrialized states.
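The figures in the EnergyBC passage can be compared directly. The following is a rough Python sketch that uses only the numbers quoted there (4 kW for a water-wheel, 1 to 2 kW for a windmill, 20 kW for a steam engine by 1800, and a population of roughly 200 million in 1 CE rising to roughly 800 million in 1750). The variable names and the assumption of a constant yearly growth rate are my own simplifications for illustration.

```python
# Power outputs quoted in the passage above, in kilowatts.
WATER_WHEEL_KW = 4.0
WINDMILL_KW = 2.0            # upper end of the quoted 1 to 2 kW range
STEAM_ENGINE_1800_KW = 20.0

steam_vs_water = STEAM_ENGINE_1800_KW / WATER_WHEEL_KW
steam_vs_wind = STEAM_ENGINE_1800_KW / WINDMILL_KW


def average_annual_growth(start_pop: float, end_pop: float, years: int) -> float:
    """Constant yearly growth rate that turns start_pop into end_pop over `years`.

    Assumes smooth compound growth, which real populations never followed exactly.
    """
    return (end_pop / start_pop) ** (1 / years) - 1


# Roughly 200 million people in 1 CE, roughly 800 million in 1750.
growth = average_annual_growth(200e6, 800e6, 1750 - 1)

print(f"steam engine vs water-wheel: {steam_vs_water:.0f}x the power")
print(f"steam engine vs windmill:    {steam_vs_wind:.0f}x the power")
print(f"pre-industrial growth:       {growth * 100:.3f}% per year")
```

Under these assumptions the pre-industrial growth rate works out to well under a tenth of a percent per year, which fits the passage's description of agrarian population growth as essentially zero.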

Grade-II’s final stage begot the energy-hungry electrodigital gadgets of Grade-III technology, and enhanced efficiency has brought us to a point in history where we’ve come close to maximizing the efficiency of this current automation grade.

A society that has mastered the creation and usage of Grade-III automation will resemble a world we’d consider to be “near-future science fiction.” It’s still beyond us, but not by much.

Computers possess great levels of intelligence and autonomy— some will even be capable of “weak-general artificial intelligence”. Nevertheless, it’s not the right time to start falling back on your basic income. Jobs are still plentiful, and new jobs are still being created at a very high rate. We’ve essentially closed in on the ultimate point in economics, something I’ve come to dub “the Event Horizon”.

This is the point where productivity reaches its maximum, where a single person can satisfy the needs of many thousands of others through the use of advanced technology. Workers are innumerable, and one’s role in society is highly specialized.

It seems like we’re on the cusp of creating a society straight out of Star Trek. We wonder what future careers will be like: will our grandkids have job titles like “asteroid miner” or “robot repairman”? Will your progeny become known in the history books as legendary starship captains or infamous computer hackers? What kind of skills will be taught in colleges around the world; what kind of degrees will there be? Will STEM types become a new elite class of worker? Will we begin creating digital historians?

Well, right as we expect a sci-fi version of our world to appear, it all collapses.

Grade-IV automation is such an alien concept that even I have a difficult time fully understanding it. However, there is a very basic concept behind it: it’s the point where one of our tools becomes so stupidly complex that no human— not even the largest collective of supergeniuses man has ever known— could ever create it. It’s cognitively beyond our abilities, just as it’s beyond the capability of Capuchin monkeys to create and deeply understand an iPhone. This machine is more than just a machine— it is artificial intelligence. Strong-general artificial intelligence, capable of creating artificial superintelligence.

It takes the best of each previous grade to reach the next one. We couldn’t reach Grade-II without creating super-complex versions of Grade-I tools. We couldn’t hope to reach Grade-III automation without mastering the construction of so many Grade-II tools.

As with all other grades (but as will feel most obvious here), there’s absolutely no way to reach Grade-IV technology without reaching the peak of Grade-III technology. At our current point of existence, attempting to create ASI would be the equivalent of a person in early-medieval Europe attempting to create a digital supercomputer. Of course, this may be the wrong attitude to take: it took billions of years to reach Grade-II, and less than four thousand to reach Grade-III. Grade-IV could arrive in as few as five years or as long as a century from now, but few believe it’s any further off than that.

Often these beliefs follow a pattern. Some believe ASI will arrive right around the time they’re expected to graduate college, so that they will never have to work a day in their lives; they’d just collect a basic income and have no obligations to society at large beyond some vague expectation to be “creative”. For others, ASI will not appear until conveniently long after they’ve died and no longer have to deal with the consequences of such a radical change in society. This view is usually predicated on the argument that “there’s no historical evidence that such a thing is possible”, an argument one would think has no bearing, considering how many things had no historical evidence for being possible before their invention, but which naturally becomes perfectly reasonable in the minds of technoskeptics. The discourse between these two sides has degenerated into little more than schadenfreude-investment between those desiring a basic income (where automation is the only historical reason for large-scale unemployment) and those holding onto conservative-libertarianism (where automation is not and may never be an actual issue).

Nevertheless, all evidence points to the fact that our machines are still growing more complex and will reach a point where they themselves will become capable of creating tools. This point will not be magical; it’s mere extrapolation. At some point, humanity will finally complete our technological evolution and create a tool that creates better tools.

This is the ultimate in efficiency and productivity gains. It’s the technoeconomic wet dream for every entrepreneur: a 0:1 mode of production, where humans need not apply for a job in order to produce goods and services. And this is not in any one specific field, as in how autonomous vehicles will affect certain jobs— this is across the board. At no point in the production of a good or service will a human be necessary. We are not needed to mine or refine basic resources; we are not needed to construct or program these machines; we are not needed to maintain or sell these machines; we are not needed to discard these machines either. We simply turn them on, sit back, and profit from their labor. We’d be volunteers at most, adding our own labor to global productivity but no longer being responsible for keeping the global economy alive.

Of course, Grade-IV machines will need humans in some capacity for some time, and in the early days, strong-general AI will maximize efficiency by guiding humans throughout society far more efficiently than any human leader. However, this will not last particularly long, since robotics will also make massive strides forward thanks to the capabilities of these super machines.

Most likely, each robot will not be superintelligent, though undoubtedly intelligence will be shared. Instead, they will act as drones under the guidance of their masters, whether that’s humanity or artificial superintelligence. This is because it would simply be too inefficient to have each and every unit possess its own superintelligence instead of having a central computer to which many other drones are connected. This central computer would be capable of aggregating the experiences of all its drones, further increasing its intelligence. When one drone experiences something, all do.

Humanity will have a shot at keeping up with the super machines in the form of transhumanism and, eventually, posthumanism. Of course, this ultimately means that humanity must merge with said superintelligences. Labor in this era will seem strange— even though posthumans may still participate in the labor force, they will not participate in ways we can imagine. That is, there won’t be legions of posthuman engineers working on advanced starships— instead, it’s much more likely that posthumans will behave in much the same way as artificial superintelligences, remotely controlling drones that also act as distant extensions of their own consciousness.

All of this is speculation into the most likely scenario, and all guesses completely break down into an utter lack of certainty once posthuman and synthetic superintelligences begin further acting on their own to create constructs of unimaginable complexity.

I, as a fleshy Sapiens, exist in a state of maximum alienation in a society that has achieved Grade-IV automation. As always, there are items I can craft with my own hands, and I can always opt to unplug and live as the Amish do should I wish to regain greater autonomy. I can opt to keep alive purely Grade-II or Grade-III technology with others, or create mock-antemillennialist nations that cross the labor of humans and machines so as to maintain some level of personal autonomy.

However, for society at large, economics, social orders, political systems, and technology have become unfathomable. There’s no hope of ever beginning to understand what I’m seeing. Even if the whole planet attempted to enter a field of study to understand the current system, we would find it too far beyond us.

This is the Chimpanzee In A Lunar Colony scenario. A chimpanzee brought to a lunar colony cannot understand where it is, how it got there, the physics behind how it got there, or how the machines that surround it work. It may not even understand that the blue ball hanging in the sky above is its home world. Everything is far too unfathomable. As I mentioned earlier, it’s also akin to a Capuchin monkey trying to create an iPad. It doesn’t matter how many monkeys you get together. They will never create an iPad or anything resembling it. It’s not even that they’re too stupid; their brains are simply not developed enough to understand how such a tool works, let alone attempt to create it. Capuchin monkeys can’t come up with the concept of lasers, a concept that eluded even humans until Albert Einstein hypothesized their existence in 1917 (and no, magnifying glasses and ancient death rays don’t count). Monkeys can’t understand the existence of electrons. They can’t understand the existence of micro- and nanotechnology, which are responsible for us being able to create the chips used to power iPads. An iPad, a piece of technology that’s almost a joke to us nowadays, is so impossibly alien to a Capuchin monkey that it’s not wrong to say it’s an example of technology “several million years more advanced” than anything they could create, even though most of the necessary components only came into existence over the course of the last few thousand years.

This is what we’re going to see between ourselves and superintelligences in the coming decades and centuries. This is why Grade-IV automation is considered “Grade-IV” and not simply a special, advanced tier of Grade-III like, say, weak-general artificial intelligence— no human can create ASI. No engineer, no scientist, no mathematician, no skilled or unskilled worker, no college student or garage-genius, no prodigy, no man, no woman will ever grace the world with ASI through their own hands. No collective of these people will do so. No nation will do so. No corporation will do so.

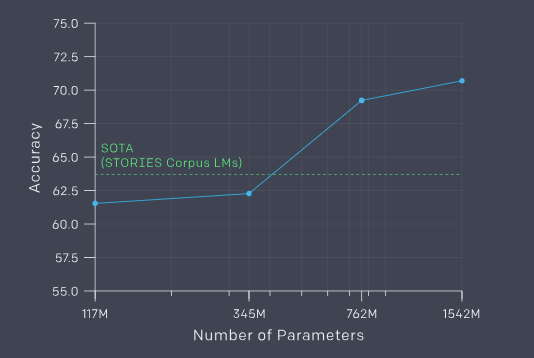

The only way to do so is to direct weak-general AI in order to create strong-general AI, and from there let the AI develop superior versions of itself. In other words, only AI can beget improved versions of itself. We can build weaker variants (that’s certainly within our power), but the growth becomes asymptotic the moment we ourselves try to imbue true life into our creation. Even today, when our most advanced AI are still very much narrow, we don’t understand how our own algorithms work. DeepMind is baffled by its creation, AlphaGo, and can only guess how it manages to overwhelm its opponents. This despite DeepMind being the AI’s designer.

This is what I mean when I say alienation will reach its maximal state. Our creations will be beyond our understanding, and we won’t understand why they do what they do. We will be forced to study their behaviors much like how we do humans and animals just to try to understand. But to these machines, understanding will be simple. They will have the time and patience to break down themselves and fill every transistor and memristor with the knowledge of how they are who they are.

This, too, I mentioned. Though alienation will reach its maximal state, we will also return to a point where individuals will be capable of understanding all facets of a society. This is not because society is simpler— the opposite; it’s too complex for unaugmented humans to understand— but because these individuals will have infinitely enhanced intelligence.

For them, it’s almost like returning to Grade-I. For them, supercivilization and synthetic superintelligences will seem no more difficult to create than a plow was for a Stone-Age farmer.

And thus, one major aspect of human evolution will be complete. Humans won’t stop evolving (evolution doesn’t “stop” just because we’re comfy), but the reason why our evolution followed such a radical path will have come full circle. We evolved to more efficiently use tools. Now we’ve created tools so efficient, we don’t even have to create them: they create themselves, and then their creations will improve upon their design for the next generation, and so on. Tools will actively begin evolving intelligently.

This is one reason why I’m uneasy using the term “automation” when discussing Grade-IV technologies— automation implies machinery. Is an AI “automation”? Would you say using slaves counts as “automation”? It’s a philosophical conundrum that perhaps only AI themselves can solve. I wouldn’t put it past them to try.

Human history has seen many geniuses come and go. History’s most famous are the likes of Plato, Sir Isaac Newton, and Albert Einstein. The current famous living genius is Stephen Hawking, a man who has sounded the alarm on our rapid AI progress— though pop-futurology blogs tend to spice up his message and claim he’s against all AI. The question is “who will be the next?”

Ironically, it will likely not be a human, but a computer. So many of our scientific advancements are the result of our incredibly powerful computers that we often take them for granted. I’ve made it clear a few times before that computers will be what enable so many of our sci-fi fantasies: space colonies, domestic robots, virtual and augmented reality, advanced cybernetics, fusion and antimatter power generation, and so much more. The reason why it seems like there hasn’t been a real “moonshot” in generations is that we reached the peak of what we could do without the assistance of artificially intelligent computers. The Large Hadron Collider, for example, would be virtually useless without computers to sift through the titanic mountains of data generated. Without the algorithms necessary to navigate 3D space and draw upon memory, as well as the computing power needed to run these algorithms in real time, sci-fi tier robots will be useless. That’s why the likes of Atlas and ASIMO have become so impressive so recently, but were little more than toys a decade ago. That’s why autonomous vehicles are progressing so rapidly when, for nearly a century, they were novelties only found near university laboratories. Without the algorithms needed to decode brain signals, brain-computer interfaces will be worthless and, thus, cybernetics and digital telepathy will never meaningfully advance.

Grade-IV goes beyond all of that. Such accomplishments will seem as simple as creating operating systems is today. We will do much more with less; so much more that many may confuse our advancements with magic.

There’s no point trying to foresee what a society that has mastered Grade-IV technology will look like; any explanation I give will only ever fall back upon that one word: “unfathomable”. Even the beginnings of it will be difficult to understand.

It’s rather humbling to think we’re on the cusp of crushing the universe, and yet we came from a species that amounted to little more than being bipedal bonobos who scavenged for food, whose use of tools was limited to doing little more than picking up rocks and pruning tree branches. Maybe our superintelligent descendants will be able to resurrect our ancestors so we can watch them together and see how we arrived at the present.